(Gauthier, et. al, 2021): Next generation reservoir computing

Implementation of the paper:

Gauthier, D.J., Bollt, E., Griffith, A. et al. Next generation reservoir computing. Nat Commun 12, 5564 (2021). https://doi.org/10.1038/s41467-021-25801-2

[1]:

import matplotlib.pyplot as plt

import numpy as np

import brainpy as bp

import brainpy.math as bm

bm.enable_x64()

bm.set_dfloat(bm.float64)

[2]:

def plot_weights(Wout, coefs, bias=None):

Wout = np.asarray(Wout)

if bias is not None:

bias = np.asarray(bias)

Wout = np.concatenate([bias.reshape((1, 3)), Wout], axis=0)

coefs.insert(0, 'bias')

x_Wout, y_Wout, z_Wout = Wout[:, 0], Wout[:, 1], Wout[:, 2]

fig = plt.figure(figsize=(10, 10))

ax = fig.add_subplot(131)

ax.grid(axis="y")

ax.set_xlabel("$[W_{out}]_x$")

ax.set_ylabel("Features")

ax.set_yticks(np.arange(len(coefs)))

ax.set_yticklabels(coefs)

ax.barh(np.arange(x_Wout.size), x_Wout)

ax1 = fig.add_subplot(132)

ax1.grid(axis="y")

ax1.set_yticks(np.arange(len(coefs)))

ax1.set_xlabel("$[W_{out}]_y$")

ax1.barh(np.arange(y_Wout.size), y_Wout)

ax2 = fig.add_subplot(133)

ax2.grid(axis="y")

ax2.set_yticks(np.arange(len(coefs)))

ax2.set_xlabel("$[W_{out}]_z$")

ax2.barh(np.arange(z_Wout.size), z_Wout)

plt.show()

Forecasting Lorenz63 strange attractor

[3]:

def get_subset(data, start, end):

res = {'x': data['x'][start: end],

'y': data['y'][start: end],

'z': data['z'][start: end]}

res = bm.hstack([res['x'], res['y'], res['z']])

return res.reshape((1, ) + res.shape)

[4]:

def plot_lorenz(ground_truth, predictions):

fig = plt.figure(figsize=(15, 10))

ax = fig.add_subplot(121, projection='3d')

ax.set_title("Generated attractor")

ax.set_xlabel("$x$")

ax.set_ylabel("$y$")

ax.set_zlabel("$z$")

ax.grid(False)

ax.plot(predictions[:, 0], predictions[:, 1], predictions[:, 2])

ax2 = fig.add_subplot(122, projection='3d')

ax2.set_title("Real attractor")

ax2.grid(False)

ax2.plot(ground_truth[:, 0], ground_truth[:, 1], ground_truth[:, 2])

plt.show()

[5]:

dt = 0.01

t_warmup = 5. # ms

t_train = 10. # ms

t_test = 120. # ms

num_warmup = int(t_warmup / dt) # warm up NVAR

num_train = int(t_train / dt)

num_test = int(t_test / dt)

Datasets

[6]:

lorenz_series = bp.datasets.lorenz_series(t_warmup + t_train + t_test,

dt=dt,

inits={'x': 17.67715816276679,

'y': 12.931379185960404,

'z': 43.91404334248268})

X_warmup = get_subset(lorenz_series, 0, num_warmup - 1)

Y_warmup = get_subset(lorenz_series, 1, num_warmup)

X_train = get_subset(lorenz_series, num_warmup - 1, num_warmup + num_train - 1)

# Target: Lorenz[t] - Lorenz[t - 1]

dX_train = get_subset(lorenz_series, num_warmup, num_warmup + num_train) - X_train

X_test = get_subset(lorenz_series,

num_warmup + num_train - 1,

num_warmup + num_train + num_test - 1)

Y_test = get_subset(lorenz_series,

num_warmup + num_train,

num_warmup + num_train + num_test)

WARNING:absl:No GPU/TPU found, falling back to CPU. (Set TF_CPP_MIN_LOG_LEVEL=0 and rerun for more info.)

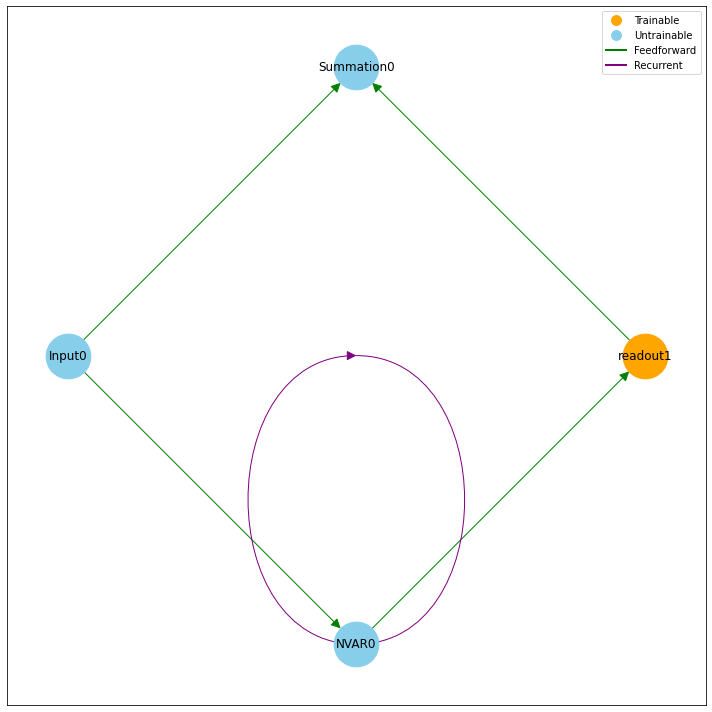

Model

[7]:

i = bp.nn.Input(3)

r = bp.nn.NVAR(delay=2, order=2, constant=True)

di = bp.nn.Dense(3, bias_initializer=None, trainable=True, name='readout1')

o = bp.nn.Summation()

[8]:

model = {i >> r >> di, i} >> o

model.plot_node_graph()

[9]:

model.initialize(num_batch=1)

print(r.get_feature_names())

['1', 'x0(t)', 'x1(t)', 'x2(t)', 'x0(t-1)', 'x1(t-1)', 'x2(t-1)', 'x0(t)^2', 'x0(t) x1(t)', 'x0(t) x2(t)', 'x0(t) x0(t-1)', 'x0(t) x1(t-1)', 'x0(t) x2(t-1)', 'x1(t)^2', 'x1(t) x2(t)', 'x1(t) x0(t-1)', 'x1(t) x1(t-1)', 'x1(t) x2(t-1)', 'x2(t)^2', 'x2(t) x0(t-1)', 'x2(t) x1(t-1)', 'x2(t) x2(t-1)', 'x0(t-1)^2', 'x0(t-1) x1(t-1)', 'x0(t-1) x2(t-1)', 'x1(t-1)^2', 'x1(t-1) x2(t-1)', 'x2(t-1)^2']

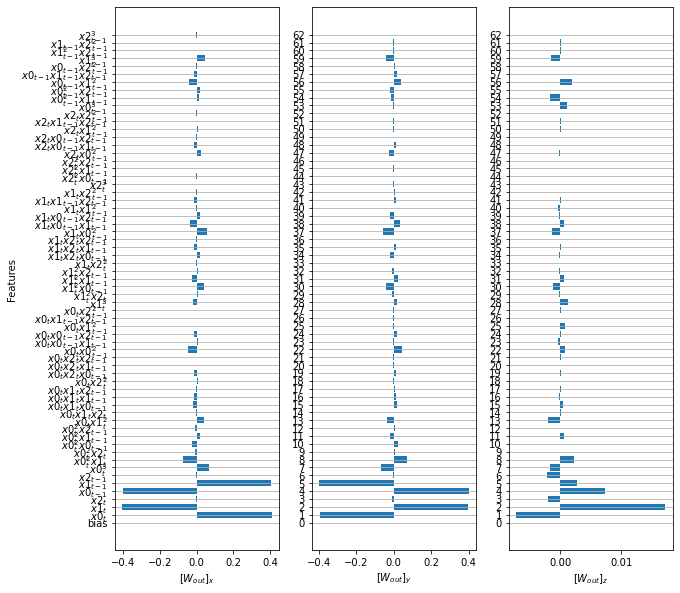

Training

[10]:

# warm-up

trainer = bp.nn.RidgeTrainer(model, beta=2.5e-6)

# training

outputs = trainer.predict(X_warmup)

print('Warmup NMS: ', bp.losses.mean_squared_error(outputs, Y_warmup))

trainer.fit([X_train, {'readout1': dX_train}])

plot_weights(di.Wff, r.get_feature_names_for_plot(), di.bias)

Warmup NMS: 258868.01050249912

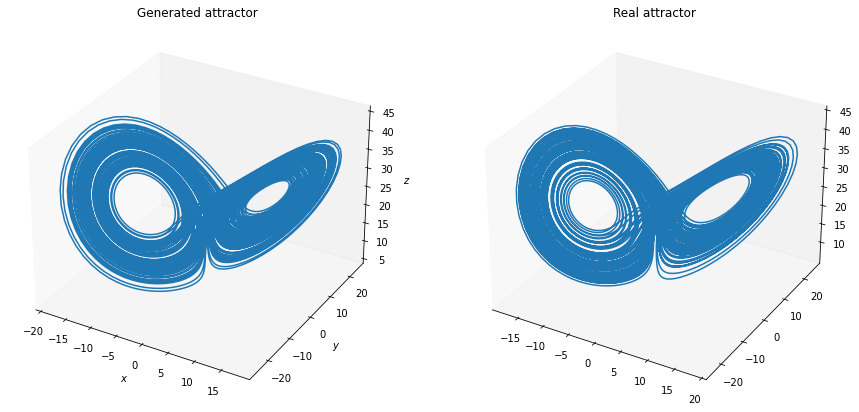

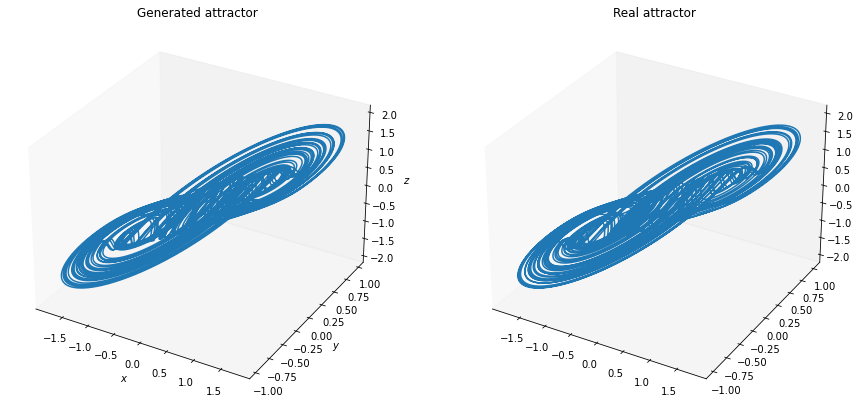

Prediction

[11]:

model = bm.jit(model)

outputs = [model(X_test[:, 0])]

for i in range(1, X_test.shape[1]):

outputs.append(model(outputs[i - 1]))

outputs = bm.asarray(outputs)

print('Prediction NMS: ', bp.losses.mean_squared_error(outputs, Y_test))

plot_lorenz(Y_test.numpy().squeeze(), outputs.numpy().squeeze())

Prediction NMS: 146.9270262956915

Forecasting the double-scroll system

[12]:

def plot_double_scroll(ground_truth, predictions):

fig = plt.figure(figsize=(15, 10))

ax = fig.add_subplot(121, projection='3d')

ax.set_title("Generated attractor")

ax.set_xlabel("$x$")

ax.set_ylabel("$y$")

ax.set_zlabel("$z$")

ax.grid(False)

ax.plot(predictions[:, 0], predictions[:, 1], predictions[:, 2])

ax2 = fig.add_subplot(122, projection='3d')

ax2.set_title("Real attractor")

ax2.grid(False)

ax2.plot(ground_truth[:, 0], ground_truth[:, 1], ground_truth[:, 2])

plt.show()

[13]:

dt = 0.02

t_warmup = 10. # ms

t_train = 100. # ms

t_test = 800. # ms

num_warmup = int(t_warmup / dt) # warm up NVAR

num_train = int(t_train / dt)

num_test = int(t_test / dt)

Datasets

[14]:

data_series = bp.datasets.double_scroll_series(t_warmup + t_train + t_test, dt=dt)

X_warmup = get_subset(data_series, 0, num_warmup - 1)

Y_warmup = get_subset(data_series, 1, num_warmup)

X_train = get_subset(data_series, num_warmup - 1, num_warmup + num_train - 1)

# Target: Lorenz[t] - Lorenz[t - 1]

dX_train = get_subset(data_series, num_warmup, num_warmup + num_train) - X_train

X_test = get_subset(data_series,

num_warmup + num_train - 1,

num_warmup + num_train + num_test - 1)

Y_test = get_subset(data_series,

num_warmup + num_train,

num_warmup + num_train + num_test)

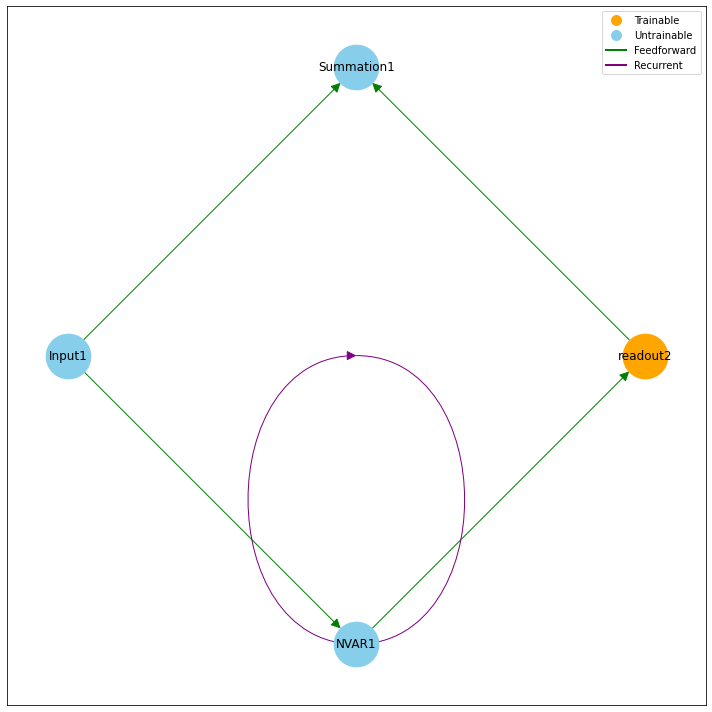

Model

[15]:

i = bp.nn.Input(3)

r = bp.nn.NVAR(delay=2, order=3)

di = bp.nn.Dense(3, trainable=True, name='readout2')

o = bp.nn.Summation()

model = {i >> r >> di, i} >> o

[16]:

model.plot_node_graph()

model.initialize(num_batch=1)

Training

[17]:

# warm-up

trainer = bp.nn.RidgeTrainer(model, beta=1e-5, jit=True)

[18]:

# training

outputs = trainer.predict(X_warmup)

print('Warmup NMS: ', bp.losses.mean_squared_error(outputs, Y_warmup))

trainer.fit([X_train, {'readout2': dX_train}])

plot_weights(di.Wff, r.get_feature_names_for_plot(), di.bias)

Warmup NMS: 6.692040921993058

Prediction

[19]:

model = bm.jit(model)

outputs = [model(X_test[:, 0])]

for i in range(1, X_test.shape[1]):

outputs.append(model(outputs[i - 1]))

outputs = bm.asarray(outputs).squeeze()

print('Prediction NMS: ', bp.losses.mean_squared_error(outputs, Y_test))

plot_double_scroll(Y_test.numpy().squeeze(), outputs.numpy())

Prediction NMS: 1.2801059066825962

Infering dynamics of Lorenz63 strange attractor

[20]:

def get_subset(data, start, end):

res = {'x': data['x'][start: end],

'y': data['y'][start: end],

'z': data['z'][start: end]}

X = bm.hstack([res['x'], res['y']])

X = X.reshape((1,) + X.shape)

Y = res['z']

Y = Y.reshape((1, ) + Y.shape)

return X, Y

[21]:

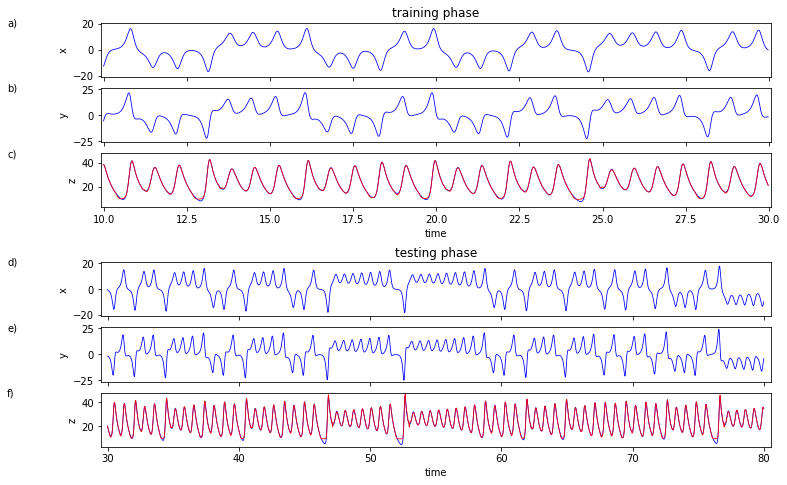

def plot_lorenz2(x, y, true_z, predict_z, linewidth=.8):

fig1 = plt.figure()

fig1.set_figheight(8)

fig1.set_figwidth(12)

t_all = t_warmup + t_train + t_test

ts = np.arange(0, t_all, dt)

h = 240

w = 2

# top left of grid is 0,0

axs1 = plt.subplot2grid(shape=(h, w), loc=(0, 0), colspan=2, rowspan=30)

axs2 = plt.subplot2grid(shape=(h, w), loc=(36, 0), colspan=2, rowspan=30)

axs3 = plt.subplot2grid(shape=(h, w), loc=(72, 0), colspan=2, rowspan=30)

axs4 = plt.subplot2grid(shape=(h, w), loc=(132, 0), colspan=2, rowspan=30)

axs5 = plt.subplot2grid(shape=(h, w), loc=(168, 0), colspan=2, rowspan=30)

axs6 = plt.subplot2grid(shape=(h, w), loc=(204, 0), colspan=2, rowspan=30)

# training phase x

axs1.set_title('training phase')

axs1.plot(ts[num_warmup:num_warmup + num_train],

x[num_warmup:num_warmup + num_train],

color='b', linewidth=linewidth)

axs1.set_ylabel('x')

axs1.axes.xaxis.set_ticklabels([])

axs1.axes.set_xbound(t_warmup - .08, t_warmup + t_train + .05)

axs1.axes.set_ybound(-21., 21.)

axs1.text(-.14, .9, 'a)', ha='left', va='bottom', transform=axs1.transAxes)

# training phase y

axs2.plot(ts[num_warmup:num_warmup + num_train],

y[num_warmup:num_warmup + num_train],

color='b', linewidth=linewidth)

axs2.set_ylabel('y')

axs2.axes.xaxis.set_ticklabels([])

axs2.axes.set_xbound(t_warmup - .08, t_warmup + t_train + .05)

axs2.axes.set_ybound(-26., 26.)

axs2.text(-.14, .9, 'b)', ha='left', va='bottom', transform=axs2.transAxes)

# training phase z

axs3.plot(ts[num_warmup:num_warmup + num_train],

true_z[num_warmup:num_warmup + num_train],

color='b', linewidth=linewidth)

axs3.plot(ts[num_warmup:num_warmup + num_train],

predict_z[num_warmup:num_warmup + num_train],

color='r', linewidth=linewidth)

axs3.set_ylabel('z')

axs3.set_xlabel('time')

axs3.axes.set_xbound(t_warmup - .08, t_warmup + t_train + .05)

axs3.axes.set_ybound(3., 48.)

axs3.text(-.14, .9, 'c)', ha='left', va='bottom', transform=axs3.transAxes)

# testing phase x

axs4.set_title('testing phase')

axs4.plot(ts[num_warmup + num_train:num_warmup + num_train + num_test],

x[num_warmup + num_train:num_warmup + num_train + num_test],

color='b', linewidth=linewidth)

axs4.set_ylabel('x')

axs4.axes.xaxis.set_ticklabels([])

axs4.axes.set_ybound(-21., 21.)

axs4.axes.set_xbound(t_warmup + t_train - .5, t_all + .5)

axs4.text(-.14, .9, 'd)', ha='left', va='bottom', transform=axs4.transAxes)

# testing phase y

axs5.plot(ts[num_warmup + num_train:num_warmup + num_train + num_test],

y[num_warmup + num_train:num_warmup + num_train + num_test],

color='b', linewidth=linewidth)

axs5.set_ylabel('y')

axs5.axes.xaxis.set_ticklabels([])

axs5.axes.set_ybound(-26., 26.)

axs5.axes.set_xbound(t_warmup + t_train - .5, t_all + .5)

axs5.text(-.14, .9, 'e)', ha='left', va='bottom', transform=axs5.transAxes)

# testing phose z

axs6.plot(ts[num_warmup + num_train:num_warmup + num_train + num_test],

true_z[num_warmup + num_train:num_warmup + num_train + num_test],

color='b', linewidth=linewidth)

axs6.plot(ts[num_warmup + num_train:num_warmup + num_train + num_test],

predict_z[num_warmup + num_train:num_warmup + num_train + num_test],

color='r', linewidth=linewidth)

axs6.set_ylabel('z')

axs6.set_xlabel('time')

axs6.axes.set_ybound(3., 48.)

axs6.axes.set_xbound(t_warmup + t_train - .5, t_all + .5)

axs6.text(-.14, .9, 'f)', ha='left', va='bottom', transform=axs6.transAxes)

plt.show()

[22]:

dt = 0.02

t_warmup = 10. # ms

t_train = 20. # ms

t_test = 50. # ms

num_warmup = int(t_warmup / dt) # warm up NVAR

num_train = int(t_train / dt)

num_test = int(t_test / dt)

Datasets

[23]:

lorenz_series = bp.datasets.lorenz_series(t_warmup + t_train + t_test,

dt=dt,

inits={'x': 17.67715816276679,

'y': 12.931379185960404,

'z': 43.91404334248268})

X_warmup, Y_warmup = get_subset(lorenz_series, 0, num_warmup)

X_train, Y_train = get_subset(lorenz_series, num_warmup, num_warmup + num_train)

X_test, Y_test = get_subset(lorenz_series, 0, num_warmup + num_train + num_test)

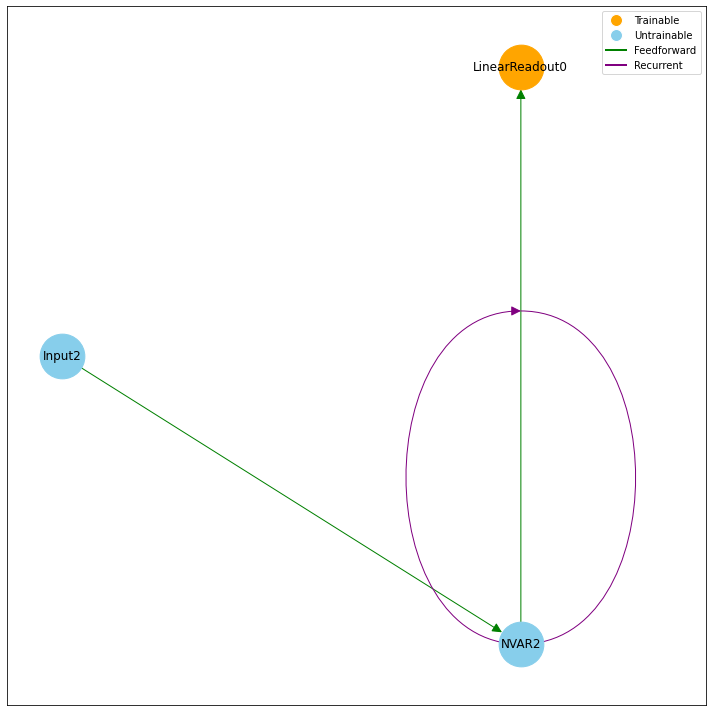

Model

[24]:

i = bp.nn.Input(2)

r = bp.nn.NVAR(delay=4, order=2, stride=5)

o = bp.nn.LinearReadout(1, trainable=True)

model = i >> r >> o

model.plot_node_graph()

model.initialize(num_batch=1)

Training

[25]:

trainer = bp.nn.RidgeTrainer(model, beta=0.05)

# warm-up

outputs = trainer.predict(X_warmup)

print('Warmup NMS: ', bp.losses.mean_squared_error(outputs, Y_warmup))

# training

trainer.fit([X_train, Y_train])

Warmup NMS: 4181.9773498459845

Prediction

[26]:

outputs = trainer.predict(X_test, reset=True)

print('Prediction NMS: ', bp.losses.mean_squared_error(outputs, Y_test))

Prediction NMS: 590.5824824408998

[27]:

plot_lorenz2(x=lorenz_series['x'].flatten().numpy(),

y=lorenz_series['y'].flatten().numpy(),

true_z=lorenz_series['z'].flatten().numpy(),

predict_z=outputs.numpy().flatten())